Technology is evolving rapidly, and its capabilities are increasingly part of everyday life. Advanced communication technologies such as 5G have increased available bandwidth, enabled real-time response and made it possible to connect millions of devices.

Fueled by the global pandemic, digital transformation has accelerated by several years. Historically, people produced most data; with the rapid growth of the Internet of Things (IoT), however, machine- and sensor-generated data is quickly becoming the majority. Data creation is growing at an accelerating pace. It is said that humans are “on a quest to digitize the world.” By 2026, global data volume is projected to exceed 221,000 exabytes. To put this in perspective, stored on 25 GB Blu-ray discs, that is a stack of nearly 9 trillion discs, tall enough to reach the moon roughly 29 times.
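The Blu-ray comparison can be checked with a few lines of arithmetic. The sketch below is only a back-of-the-envelope estimate: the disc capacity (25 GB, single layer), the disc thickness (1.2 mm) and the Earth-moon distance (the 384,400 km average) are assumptions made here, not figures from the projection itself.

```python
# Back-of-the-envelope check of the Blu-ray stack comparison.
# Every constant except DATA_VOLUME_EB is an assumption made for illustration.

EXABYTE = 10**18             # bytes, decimal (SI) definition
GIGABYTE = 10**9             # bytes, decimal (SI) definition

DATA_VOLUME_EB = 221_000     # projected global data volume cited in the text
DISC_CAPACITY_GB = 25        # assumed: single-layer Blu-ray disc
DISC_THICKNESS_MM = 1.2      # assumed: standard optical disc thickness
MOON_DISTANCE_KM = 384_400   # assumed: average Earth-moon distance


def discs_needed(volume_eb: int, capacity_gb: int) -> int:
    """Number of discs needed to hold the volume, rounded up."""
    total_bytes = volume_eb * EXABYTE
    disc_bytes = capacity_gb * GIGABYTE
    return -(-total_bytes // disc_bytes)  # integer ceiling division


def stack_height_km(disc_count: int, thickness_mm: float) -> float:
    """Height of a stack of discs, converted from millimetres to kilometres."""
    return disc_count * thickness_mm / 1_000_000


def moon_trips(height_km: float, distance_km: float) -> float:
    """How many one-way Earth-moon distances fit into the stack height."""
    return height_km / distance_km


if __name__ == "__main__":
    discs = discs_needed(DATA_VOLUME_EB, DISC_CAPACITY_GB)
    height = stack_height_km(discs, DISC_THICKNESS_MM)
    trips = moon_trips(height, MOON_DISTANCE_KM)
    print(f"discs: {discs:,}")
    print(f"stack height: {height:,.0f} km")
    print(f"one-way moon trips: {trips:.1f}")
```

The result is sensitive to the assumptions: a higher-capacity disc lowers the disc count proportionally, and a shorter assumed distance, such as the moon's closest approach, raises the number of trips.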

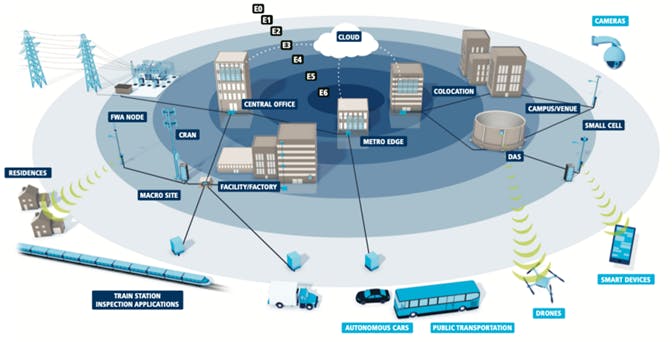

In today’s hyperconnected world, companies need a way to scale and analyze data faster, cheaper and better. The only way to do that is to move out of the cloud and onto the edge of the network, where most future data will be generated, analyzed and processed.

Reaching the edge

Edge computing brings processing capabilities closer to the end-user device or the source of data, which eliminates the journey all the way back to the cloud and thus reduces latency. Latency can be thought of as the currency of the edge, driven primarily by applications that require near real-time response. For example, autonomous vehicles will need not only to control themselves but also to communicate with the other vehicles around them and with smart city infrastructure to triangulate their position. Imagine connected health applications in which 5G-enabled ambulances allow doctors to perform triage on a patient while en route to the hospital. This is only possible with a robust compute architecture at the edge, with significant compute infrastructure in the ambulance, along the streets and in the hospital. Another benefit of edge computing is that it reduces data backhaul to the cloud, which frees up bandwidth and reduces costs.
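One way to see why proximity matters is to estimate propagation delay alone, the time light needs to travel through the fiber and back. The sketch below assumes a refractive index of 1.47 for single-mode fiber, and the site names and distances are hypothetical. It ignores switching, queuing and processing time, so the results are a lower bound rather than end-to-end latency.

```python
# Rough estimate of round-trip propagation delay over optical fiber.
# This is a lower bound: switching, queuing and processing are ignored.

SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum
FIBER_REFRACTIVE_INDEX = 1.47      # assumed typical value for single-mode fiber


def round_trip_ms(distance_km: float,
                  refractive_index: float = FIBER_REFRACTIVE_INDEX) -> float:
    """Round-trip propagation delay in milliseconds for a fiber path."""
    speed_in_fiber_km_s = SPEED_OF_LIGHT_KM_S / refractive_index
    return 2 * distance_km / speed_in_fiber_km_s * 1000


# Hypothetical fiber path lengths, chosen only to illustrate the scale.
HYPOTHETICAL_SITES_KM = {
    "remote cloud region": 1500.0,
    "metro data center": 50.0,
    "street-side cabinet": 1.0,
}

if __name__ == "__main__":
    for site, distance_km in HYPOTHETICAL_SITES_KM.items():
        print(f"{site}: {round_trip_ms(distance_km):.3f} ms round trip")
```

Because the delay scales linearly with distance, moving compute from a site 1,500 km away to one 50 km away cuts the propagation component by a factor of 30 before any other optimization.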

The edge will require an unprecedented level of connectivity. Positioned on a spectrum between the hyperscale cloud and the device or application edge, this physical compute infrastructure requires a dense fabric of fiber to achieve the necessary level of performance. Today’s flexible ribbon fiber designs, particularly cables built with 200µm single-mode fiber, are pushing the limits of achievable density per square inch. The result is higher fiber density, lower overall weight and a smaller bend radius, all of which make installation easier.

For data center operators, maximizing the number of connections while minimizing the overall infrastructure footprint—to maximize their bandwidth-per-square-inch—is paramount. This requires more than just fiber and cable innovation. Merging innovations in cable design, high-density fiber management and modularity is the recipe for a true end-to-end solution that provides expandability, flexibility and accessibility across the edge continuum.

Starting at the cloud

At the heart of this continuum is the Public Cloud [E6]. Owned by hyperscalers such as Google, Amazon and Microsoft, these massive facilities are typically located in remote areas. They demand flexible, scalable, high-performance, high-density fiber infrastructure designed to support an ever-increasing number of data-hungry applications and customer requirements. Cloud data centers are not on the edge. In fact, the edge begins where the hyperscale data centers end. At these data centers we see latencies of 100ms or more. Most applications today can tolerate that latency, but is 100ms good enough to control a fleet of drones or autonomous cars, or to perform remote surgery?

Most edge deployments today are happening at the Regional Edge. These are MetroEdge and Colocation [E5] data centers, used by the hyperscalers as points of presence (PoPs) as they aim to get closer to the end user. These facilities can be as large as a hyperscale data center. This is also where telco service providers locate their core/interfacing nodes and provide customer connections to their access network, the mobile core. These sites are near peering points and therefore near major cities, which makes them ideal for edge computing. On average, latencies of approximately 50ms can be achieved here.

At the Network Edge, also called the Carrier Edge, most edge nodes will reside in Central Offices [E4]. This is traditionally where telephone lines in a region would meet and be redirected to their destination—the aggregation point. Privately owned by telco service providers, these data center-like environments are found in every locality and are regarded as being at the outer edge of the mobile network. While they have traditionally served fixed line connections, many are now being repurposed into more digital facilities, creating space for edge data centers. Located between the RAN and the core, central offices can achieve latencies of close to 30ms.

To support data collection from the vast number of IoT devices and sensors, the edge network will also need to extend to office campuses, factories, venues, hospitals and logistics centers at the Enterprise Edge [E3]. On-premises data centers at the Enterprise Edge, located at the enterprise’s own facilities, have existed for some time. Enterprises can run a private cloud here, which provides some of the scalability of the public cloud while being far more secure. They can also benefit from more customizable infrastructure tailored to the needs of their applications.

Until recently, MetroEdge data centers were thought of as being “the edge.” But that is changing. Micro-data centers, or modular edge data centers, are coming into use today. These very small, generally unstaffed facilities allow highly localized placement of mission-critical infrastructure. These special-purpose edge data centers may be small enough to fit on the rooftops of commercial buildings, in facility parking lots, on university campuses or near high-traffic locations in major population clusters, such as sports and entertainment venues.

Varying infrastructure requirements

The infrastructure required for these various types of deployments will differ substantially. Industrial users, for example, will put a premium on reliability and low latency, requiring ruggedized edge solutions that can operate in the harsh environment of a factory floor. They will rely on dedicated communication links, such as private 5G, dedicated WiFi networks or wired connections.

Other use cases present different challenges entirely. Retailers can use edge nodes for a variety of functions, such as combining point-of-sale data with targeted promotions, tracking foot traffic and offering self-checkout. The connectivity here could be more traditional, combining WiFi with Bluetooth or other low-power infrastructure.

The Enterprise Edge will require a blend of traditional data center infrastructure, enterprise LAN and even outside plant infrastructure for the more ruggedized environments. We’re also beginning to see prefabricated edge racks. AWS Outposts is a good example. These are prefabricated solutions that can simply be rolled into a central office, or potentially an enterprise factory or office, connected and immediately put to work providing edge compute capability.

As edge computing expands into deeper parts of the network, it could be co-located with Macro Sites, Small Cell/FWA, CRAN and Private LTE [E2] cell nodes. This is where we begin to see a blend of the edge compute with the wireless core. Many edge computing models see cellular base stations as key points to connect end-user devices to the core network with deployment of storage and compute at tower sites. Towers are located at the “last mile”—the final step of the telco service provider networks to their end customers.

The environment here will be vastly different from that of a traditional data center. These challenging environments require diverse infrastructure and focused solutions. Important considerations include meeting strict space constraints and operating in dusty, less-maintained conditions with less temperature regulation. Here the challenges extend beyond connectivity: we also need to think about how to solve power and cooling at these locations.

At the Application Edge, we’re referring to micro-data centers at the customer campus or streetside, such as OSP Cabinets/Fixtures [E1], becoming edge compute nodes. These are designed to be as close to the end user as possible, where latency is lowest: 2 to 5ms is achievable. How would we support an edge compute node in an OSP fiber management distribution box installed at the streetside? This space will drive innovation in hybrid solutions that bring power and communications together.
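The latency figures quoted for each layer of the continuum can be read as a simple placement rule: put a workload at the most centralized layer that still meets its latency budget. The sketch below is a hypothetical illustration of that rule, not a planning tool. The `EdgeTier` class and `most_centralized_tier` function are invented here, only the layers with a latency figure in the text are included, and each figure is treated as a fixed typical value (using 5ms, the upper end of the 2 to 5ms range, for the Application Edge).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class EdgeTier:
    """A layer of the edge continuum with an assumed typical latency."""
    name: str
    typical_latency_ms: float


# Ordered from most centralized to closest to the end user.
# Latencies are the approximate figures quoted in the article,
# treated here as fixed values for illustration only.
TIERS = [
    EdgeTier("Public Cloud", 100.0),
    EdgeTier("Regional Edge", 50.0),
    EdgeTier("Network Edge", 30.0),
    EdgeTier("Application Edge", 5.0),
]


def most_centralized_tier(budget_ms: float) -> Optional[EdgeTier]:
    """Return the first (most centralized) tier whose typical latency
    fits the budget, or None if no listed tier is fast enough."""
    for tier in TIERS:
        if tier.typical_latency_ms <= budget_ms:
            return tier
    return None


if __name__ == "__main__":
    # Hypothetical latency budgets, chosen only to exercise each tier.
    for budget in (120.0, 60.0, 30.0, 10.0, 1.0):
        tier = most_centralized_tier(budget)
        label = tier.name if tier else "no listed tier"
        print(f"budget {budget:>5.1f} ms -> {label}")
```

Preferring the most centralized layer that fits is itself an assumption, based on the idea that capacity closer to the core is easier to scale and maintain. A real placement decision would also weigh backhaul cost, power, cooling and site constraints.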

Innovations everywhere

Think about optical enclosures that can also deal with the thermal and environmental challenges present where these compute nodes are located. As applications drive even greater density, is it possible that compute power will at some point be integrated into the optical infrastructure itself, such as aerial and underground splice enclosures? It becomes increasingly clear that we have to solve not only the backhaul and communication challenge but also the power challenge, not to mention the site-specific considerations that varying environmental conditions will require.

These are innovations that we need to begin thinking about today even though the applications that will require them are not here yet, or at least are not yet mainstream. In the process of building out the OSP networks in metros and rural areas today, we need to think about how we can provision them to allow for edge compute infrastructure to be installed in the future.

Fueled by mankind’s insatiable appetite for data, our modern world is connected by an immense wired and wireless infrastructure—one that will enable transformational and pivotal opportunities for not only those installing the networks, but also the society that is making use of them.

Our quest to digitize the world depends upon a vast infrastructure of fiber and optical connectors coupled with countless panels and cabinets. When the right components are chosen, a truly futureproof network can be achieved—one that can support the transformative applications of our emerging reality. And the edge data center stands at the heart of this emerging reality.

Manja Thessin RCDD/RTPM serves as Enterprise Market Manager for AFL, leading strategic planning and market analysis initiatives. Manja has 21 years of ICT experience in the field, design-and-engineering, and project management. She has managed complex initiatives in Data Center, Education, Industrial/Manufacturing and Healthcare. Manja earned a master’s certificate from Michigan State University in Strategic Leadership and holds RCDD and RTPM certifications from BICSI.

This article first appeared in Cabling Installation & Maintenance.